This lecture is part of the Machine Learning Master's program at the University of Tübingen. The course is run by the Autonomous Learning Group at the MPI for Intelligent Systems.

Dates:

Mon. 16:15 - 17:45: online lecture

Tue. 14:15 - 15:45: virtual recitation (starting on Nov 10th); Zoom link in ILIAS

Exam / project deadline: 16.03.2021 (see ILIAS and project description for details)

Course description:

The course will provide you with theoretical and practical knowledge of reinforcement learning, a field of machine learning concerned with decision-making and interaction with dynamical systems, such as robots. We start with a brief overview of supervised learning and spend most of the time on reinforcement learning. The exercises will help you get hands-on experience with the methods and deepen your understanding.

Qualification Goals:

Students gain an understanding of reinforcement learning formulations, problems, and algorithms on a theoretical and practical level. After this course, students should be able to implement and apply deep reinforcement learning algorithms to new problems.

People:

Instructor: Dr. Georg Martius

Teaching Assistants: Sebastian Blaes, Marin Vlastelica, Maximilian Seitzer

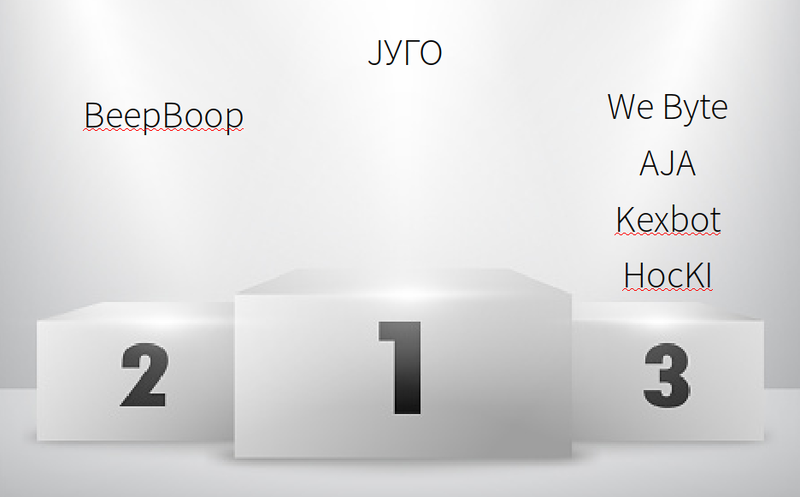

Final Project Tournament

We had great fun in the tournament. Thanks for your participation. There were 42 simultaneously active players, and 300,000 games were played. Many thanks to Sebastian Blaes for implementing and running the tournament server.

Course materials:

Both slides and exercises are available on ILIAS.

Lectures

- Lecture 1 Introduction and Neural Networks, Background reading: C.M. Bishop Pattern Recognition and Machine Learning, Chap. 5

- Lecture 2 Imitation Learning

- Lecture 3 The RL setup, Background reading: Sutton and Barto Reinforcement learning for the next few lectures (for this lecture, parts of Chapter 3)

- Lecture 4 MDPs, Background reading: Sutton and Barto Reinforcement learning Chapter 4

- Lecture 5 Model-free Prediction, Background reading: Sutton and Barto Reinforcement learning First part of Chapters 5, 6, 7, 12

- Lecture 6 Model-free Control, Background reading: Sutton and Barto Reinforcement learning Chapters 5.2, 5.3, 5.5, 6.4, 6.5, 12.7

- Lecture 7: Value Function Approximation, Background reading: Sutton and Barto Reinforcement learning Chapters 9.1-9.8, 10.1, 10.2, 11.1-11.3. Supplementary: DQN paper 1, paper 2, NFQ paper

- Lecture 8: Policy Gradient. Background reading: Sutton and Barto Reinforcement learning Chapter 13

- Lecture 9: Policy Gradient and Actor-Critic; Background reading: Natural Actor Critic Paper, TRPO Paper, PPO Paper

- Lecture 10: Q-learning style Actor-Critic; Background reading: DPG Paper, DDPG Paper, TD3 Paper

- Lecture 11: Model-based Methods: Dyna-Q, MBPO; Background reading: Sutton and Barto Reinforcement learning Chapter 8 and the MBPO Paper

- Lecture 12: Model-based Methods II: Online-Planning: CEM, PETS, iCEM; Background reading: PETS paper, iCEM paper (videos)

- Lecture 13: AlphaGo and Recent work from my group (Blackbox solver differentiation); Background reading: AlphaGo paper (also in ILIAS, because it is behind a paywall), AlphaZero Paper, Blackbox differentiation

- Lecture 14: Exploration and Intrinsic Motivation with recent work from my group; Background reading: Sutton and Barto Reinforcement learning Chapter 2, HER paper, "Control What You Can" paper

- Feb. 22nd: Summary and Q&A

Exercises:

See ILIAS!

Related readings:

- Sutton & Barto, Reinforcement Learning: An Introduction

- Bertsekas, Dynamic Programming and Optimal Control, Vol. 1

- Bishop, Pattern Recognition and Machine Learning